This test is important but has a number of problems in implementation. To understand the issue and solution clearly, the situation is discussed in three stages: the ECG design aspect that the standard is trying to confirm, the problems with the test as written in the standard, and finally a proposed solution.

The ECG design issue

Virtually all ECGs apply some op-amp gain before the high-pass filter that removes the dc offset. This gain stage can saturate when the dc level is high. The saturation point varies greatly between manufacturers, but is usually in the range of 350 to 1000mV. On the patient side, a high dc offset is usually caused by poor contact at the electrode site, ranging from an electrode that is completely disconnected through to other issues such as an old gel electrode.
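As a rough illustration, the input-referred saturation point of such a stage is its output swing divided by its gain. The sketch below assumes an ideal, hard-clipping gain stage with a symmetric output swing; the 3V swing and gain of 5 are hypothetical values chosen only to land inside the 350 to 1000mV range quoted above, and the function names are illustrative.

```python
def input_saturation_mv(rail_mv: float, gain: float) -> float:
    """Input dc offset (mV) at which an ideal gain stage reaches its rail.

    Assumes a hard-clipping stage with a symmetric output swing of +/-rail_mv.
    """
    if gain <= 0:
        raise ValueError("gain must be positive")
    return rail_mv / gain


def gain_stage_output_mv(vin_mv: float, gain: float, rail_mv: float) -> float:
    """Output of an ideal gain stage: linear until clipped at +/-rail_mv."""
    return max(-rail_mv, min(rail_mv, gain * vin_mv))


# Hypothetical example: 3V output swing, gain of 5 ahead of the high-pass filter.
RAIL_MV = 3000.0
GAIN = 5.0
print(input_saturation_mv(RAIL_MV, GAIN))          # 600.0
print(gain_stage_output_mv(700.0, GAIN, RAIL_MV))  # 3000.0 (clipped at the rail)
```

Real amplifiers do not clip this sharply, which is why a region of progressive distortion exists before a complete flat line.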

Most ECGs detect when the signal is close to saturation and trigger a "Leads off" or "Check electrodes" message to the operator. Detection must be applied individually to each lead electrode and to both positive and negative voltages, so there are up to 18 different detection points (a positive and a negative point for each of LA, RA, LL and V1 to V6). Due to component tolerances, the detection points often vary by around 20mV between leads (e.g. LA might trigger at +635mV and -620mV, V3 at +631mV and -617mV, etc.).
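The 18 detection points can be pictured as a small table with one positive and one negative threshold per electrode. In the sketch below, the LA and V3 values are the examples quoted above; every other threshold is a hypothetical placeholder, and `leads_off` is an illustrative helper, not any manufacturer's implementation.

```python
ELECTRODES = ["RA", "LA", "LL", "V1", "V2", "V3", "V4", "V5", "V6"]

# (positive threshold, negative threshold) in mV.
# LA and V3 use the example values from the text; the rest are hypothetical.
THRESHOLDS_MV = {e: (+625.0, -615.0) for e in ELECTRODES}
THRESHOLDS_MV["LA"] = (+635.0, -620.0)
THRESHOLDS_MV["V3"] = (+631.0, -617.0)


def detection_points():
    """Every (electrode, polarity) combination that needs its own check."""
    return [(e, pol) for e in ELECTRODES for pol in ("+", "-")]


def leads_off(electrode: str, offset_mv: float) -> bool:
    """True if the dc offset on this electrode crosses either threshold."""
    pos, neg = THRESHOLDS_MV[electrode]
    return offset_mv >= pos or offset_mv <= neg


print(len(detection_points()))  # 18
print(leads_off("LA", 633.0))   # False: below LA's +635mV point
print(leads_off("V3", 633.0))   # True: above V3's +631mV point
```

The same 633mV offset triggers one electrode but not another, which is why each point needs to be checked individually.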

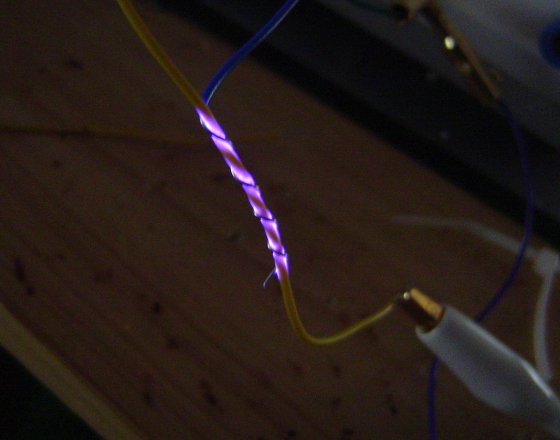

If the signal is completely saturated, it appears as a flat line on the ECG display. However, there is a small region where the signal is still visible but distorted (see Figure 1). Good design ensures that detection occurs before any saturation begins. Many ECGs automatically show a flat line once the "Leads Off" message is indicated, to avoid displaying a distorted signal.
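The three regions (unaffected, progressively distorted, flat line) can be reproduced with a simple soft-saturation model. This is an assumed model, not a description of any particular ECG: referred to the input, the amplifier is linear up to a hypothetical "knee" of 500mV and above that compresses smoothly (tanh) towards a hypothetical limit of 550mV. The helper reports the apparent peak-to-peak amplitude of a 1mV signal riding on a given dc offset.

```python
import math

KNEE_MV = 500.0   # hypothetical: distortion starts here (input-referred)
LIMIT_MV = 550.0  # hypothetical: output approaches but never exceeds this


def transfer_mv(v_mv: float) -> float:
    """Input-referred transfer curve: linear, then soft-limited (odd-symmetric)."""
    mag = abs(v_mv)
    if mag <= KNEE_MV:
        out = mag
    else:
        span = LIMIT_MV - KNEE_MV
        out = KNEE_MV + span * math.tanh((mag - KNEE_MV) / span)
    return math.copysign(out, v_mv)


def apparent_amplitude_mv(offset_mv: float, signal_pp_mv: float = 1.0) -> float:
    """Peak-to-peak amplitude of a small signal riding on offset_mv."""
    half = signal_pp_mv / 2.0
    return transfer_mv(offset_mv + half) - transfer_mv(offset_mv - half)


for offset in (0.0, 400.0, 510.0, 530.0, 700.0):
    print(offset, round(apparent_amplitude_mv(offset), 3))
```

With these numbers the 1mV signal is untouched up to 500mV, shrinks progressively between roughly 500 and 550mV, and is essentially a flat line by 700mV.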

Problems with the standard

The first problem is the use of a large ±5V offset. This conflicts with the standard itself, since Clause 201.12.4.105.2 states that ECGs only need to withstand up to ±0.5V without damage. Modern ECGs use ±3V or less for the internal amplifiers, so applying ±5V could needlessly damage the ECG.

This concern also applies to the test equipment (Figure 201.106). If care is not taken, the 5V can easily damage the 0.1% precision resistors in the output divider and the internal dc offset components.

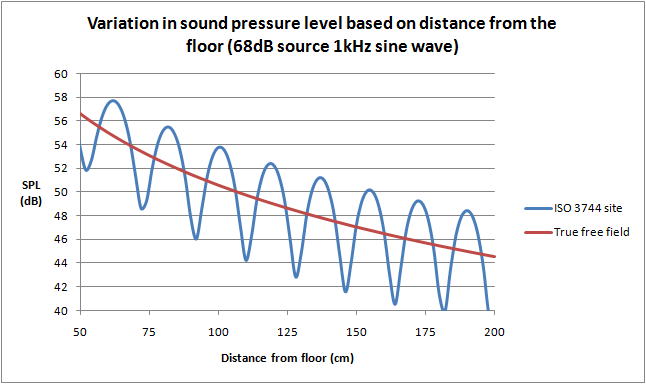

Next, the standard specifies that the voltage is applied in 1V steps. This means equipment can pass the test even though it fails the requirement. For example, an ECG may start to distort at +500mV and flat-line by +550mV, while the designer accidentally sets the "Leads Off" threshold at +600mV. Between 500 and 550mV this design displays a distorted signal without any indication, and between 550 and 600mV the operator is left confused as to why a flat line appears. If tested in 1V steps, these problem regions would not be detected and a Pass result would be recorded.
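The example above can be checked numerically. The sketch below uses the thresholds from the example (distortion from +500mV, flat line from +550mV, "Leads Off" at +600mV) and compares what a 1V-step sweep and a 10mV-step sweep would observe. `classify` and `sweep` are illustrative helpers only, and the 10mV step is an assumed value.

```python
DISTORT_MV = 500.0    # distortion begins (from the example in the text)
FLATLINE_MV = 550.0   # display shows a flat line
LEADS_OFF_MV = 600.0  # "Leads Off" message threshold (set too high)

BAD_STATES = {"distorted_no_message", "flat_line_no_message"}


def classify(offset_mv: float) -> str:
    """What the operator sees at a given positive dc offset."""
    if offset_mv >= LEADS_OFF_MV:
        return "leads_off"
    if offset_mv >= FLATLINE_MV:
        return "flat_line_no_message"
    if offset_mv >= DISTORT_MV:
        return "distorted_no_message"
    return "ok"


def sweep(step_mv: float, max_mv: float = 5000.0) -> set:
    """Set of display states observed when stepping the offset up from 0."""
    n_steps = int(max_mv // step_mv)
    return {classify(i * step_mv) for i in range(n_steps + 1)}


print(sweep(1000.0) & BAD_STATES)  # set(): the 1V sweep sees nothing wrong
print(sweep(10.0) & BAD_STATES)    # both problem regions are found
```

The 1V sweep only ever lands on "ok" or "leads_off", so the faulty design is recorded as a Pass.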

Finally, the standard allows distortion of up to 50% (a 1mV signal compressed to 0.5mV). This is a huge amount of distortion, and there is no technical justification for allowing it, given that it is technically simple to ensure a "Leads Off" message appears well before any distortion. The standard should simply keep the same limit as for normal sensitivity (±5%).

Solution

In practice, it is recommended that the test engineer start at a 300mV offset and search for the point where the message appears, reduce the offset until the message clears, and then slowly increase it again up to the message point while confirming that no visible distortion occurs (see Figure 3). The test should be performed in both the positive and negative directions, and on each lead electrode (RA, LA, LL, V1 to V6). The dc offset function in the Whaleteq SECG makes this test easy to perform (test range up to ±1000mV), but the test is also simple enough that an ad-hoc set-up is easy to prepare.
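The search procedure can be written down as a short routine. This is a sketch under stated assumptions: `set_offset`, `message_shown` and `measure_amplitude` are hypothetical callbacks standing in for the offset source and for the engineer's reading of the display; the 10mV coarse step, 1mV fine step and 1000mV ceiling are assumed values; and the ±5% limit is the one recommended in this article. `SimulatedEcg` is a toy device (linear compression between a distortion point and a flat-line point) used only to exercise the routine.

```python
from typing import Callable


def find_and_verify(
    set_offset: Callable[[float], None],
    message_shown: Callable[[], bool],
    measure_amplitude: Callable[[], float],
    polarity: int = +1,
    start_mv: float = 300.0,
    max_mv: float = 1000.0,
    coarse_mv: float = 10.0,
    fine_mv: float = 1.0,
    limit: float = 0.05,
) -> dict:
    """Search, back off, then creep up to the message while checking distortion."""
    fail = {"trigger_mv": None, "worst_dev": None, "ok": False}
    set_offset(0.0)
    reference = measure_amplitude()  # amplitude with no dc offset

    # 1. Coarse search upwards from start_mv until the message appears.
    level = start_mv
    set_offset(polarity * level)
    while not message_shown():
        level += coarse_mv
        if level > max_mv:
            return fail  # no indication within the test range
        set_offset(polarity * level)

    # 2. Back off in fine steps until the message clears.
    while message_shown():
        level -= fine_mv
        if level <= 0:
            return fail  # message never clears
        set_offset(polarity * level)

    # 3. Creep back up, checking the amplitude at every step before the message.
    worst_dev = 0.0
    while not message_shown():
        worst_dev = max(worst_dev, abs(measure_amplitude() - reference) / reference)
        level += fine_mv
        if level > max_mv:
            return fail
        set_offset(polarity * level)

    return {"trigger_mv": polarity * level, "worst_dev": worst_dev,
            "ok": worst_dev <= limit}


class SimulatedEcg:
    """Toy ECG: distorts from distort_mv, flat from flat_mv, message at msg_mv."""

    def __init__(self, distort_mv: float, flat_mv: float, msg_mv: float):
        self.distort_mv, self.flat_mv, self.msg_mv = distort_mv, flat_mv, msg_mv
        self.offset = 0.0

    def set_offset(self, mv: float) -> None:
        self.offset = mv

    def message_shown(self) -> bool:
        return abs(self.offset) >= self.msg_mv

    def measure_amplitude(self) -> float:
        x = abs(self.offset)
        if x <= self.distort_mv:
            return 1.0
        if x >= self.flat_mv:
            return 0.0
        # Linear compression between the distortion and flat-line points.
        return 1.0 - (x - self.distort_mv) / (self.flat_mv - self.distort_mv)


good = SimulatedEcg(distort_mv=700.0, flat_mv=750.0, msg_mv=630.0)
bad = SimulatedEcg(distort_mv=500.0, flat_mv=550.0, msg_mv=600.0)
for name, ecg in (("good", good), ("bad", bad)):
    print(name, find_and_verify(ecg.set_offset, ecg.message_shown, ecg.measure_amplitude))
```

The "bad" device reproduces the example from the previous section: its message appears at +600mV, but the fine creep up from 599mV finds a flat line first, so it is marked NG. Running the routine with `polarity=-1` covers the negative direction.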

Due to the high number of tests, it might be tempting to skip some leads on the assumption that some are representative of the others. Unfortunately, experience indicates that manufacturers sometimes, deliberately or accidentally, miss some detection points on some leads, or set the operating point to the wrong level, such that distortion is visible before the message appears. It is therefore recommended that all lead electrodes are checked. Test engineers can opt for a simple OK/NG record, keeping the operating points of at least one lead for reference. Detailed data for the other leads need only be kept if they are significantly different; for example, some ECGs have very different trigger points for the chest leads (V1 to V6).
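One way to apply this record-keeping approach is sketched below. The data layout, the choice of LA as the reference lead and the 50mV "significantly different" margin are all hypothetical; the LA and V3 trigger points are the examples given earlier, and V1 stands in for a chest lead with very different trigger points.

```python
def summarise(results: dict, reference: str = "LA", significant_mv: float = 50.0) -> dict:
    """Reduce per-electrode results to an OK/NG record.

    results maps electrode -> {"+": (trigger_mv, ok), "-": (trigger_mv, ok)}.
    Trigger points are kept for the reference lead, and for any lead whose
    trigger point differs from the reference by more than significant_mv
    (or was not found at all).
    """
    ref = results[reference]

    def differs(a, b):
        return a is None or b is None or abs(a - b) > significant_mv

    record = {}
    for electrode, by_pol in results.items():
        ok = all(by_pol[p][1] for p in ("+", "-"))
        entry = {"result": "OK" if ok else "NG"}
        if electrode == reference or any(
            differs(by_pol[p][0], ref[p][0]) for p in ("+", "-")
        ):
            entry["trigger_mv"] = {p: by_pol[p][0] for p in ("+", "-")}
        record[electrode] = entry
    return record


# LA and V3 values are from the text; V1 is a hypothetical chest lead.
results = {
    "LA": {"+": (635.0, True), "-": (-620.0, True)},
    "V3": {"+": (631.0, True), "-": (-617.0, True)},
    "V1": {"+": (450.0, True), "-": (-440.0, True)},
}
for electrode, entry in summarise(results).items():
    print(electrode, entry)
```

Here LA keeps its operating points as the reference, V3 is recorded as a plain OK, and V1 keeps its operating points because they differ markedly from LA.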

Due to the nature of electronics, any detectable distortion before the "Leads Off" message should be treated with concern, since the point of op-amp saturation varies from unit to unit. For example, one ECG may show 10% distortion at +630mV while another sample shows 35%. Since some limit must apply (it is impossible to verify "no distortion"), a limit of ±5% relative to a reference measurement taken with no dc offset is recommended.
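Taking "distortion" as the relative change in the displayed amplitude of a test signal (an assumption consistent with the 1mV to 0.5mV = 50% example earlier), the recommended criterion reduces to a simple comparison. The 0.90mV and 0.65mV amplitudes below correspond to the 10% and 35% example; they are illustrative, not measured data.

```python
def distortion_pct(amplitude_mv: float, reference_mv: float) -> float:
    """Relative amplitude change (%) versus the no-offset reference."""
    return 100.0 * (amplitude_mv - reference_mv) / reference_mv


def passes(amplitude_mv: float, reference_mv: float, limit_pct: float = 5.0) -> bool:
    """True if the amplitude is within +/-limit_pct of the reference."""
    return abs(distortion_pct(amplitude_mv, reference_mv)) <= limit_pct


REFERENCE_MV = 1.0  # amplitude measured with no dc offset
print(passes(0.90, REFERENCE_MV))                  # False: 10% distortion
print(passes(0.65, REFERENCE_MV))                  # False: 35% distortion
print(passes(0.97, REFERENCE_MV))                  # True: 3% is within +/-5%
print(passes(0.50, REFERENCE_MV, limit_pct=50.0))  # True under a 50% limit
```

The last line shows why a 50% limit is so permissive: a signal compressed to half its size still passes.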

The right leg

The right leg (RL) is excluded from the above discussion because, in practice, RL is itself the source of the dc offset voltage: it provides feedback that attempts to cancel both ac and dc offsets, and an open lead or poor electrode causes this feedback to drift towards the internal rail voltage (typically 3V in modern systems). This feedback passes through a large resistor (typically 1MΩ), so there is no risk of damage (a hint to standards committees: if 5V is really required, it should be applied via a large resistor).
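The benefit of the series resistor is plain Ohm's law. The sketch below assumes the worst case, where the far end is held near 0V so the full source voltage appears across the resistor; the function name is illustrative.

```python
def limited_current_ua(voltage_v: float, series_ohm: float) -> float:
    """Worst-case current (µA) from a source driven through a series resistor.

    Assumes the far end is held near 0V, so the full voltage appears across
    the resistor (Ohm's law, I = V / R).
    """
    return voltage_v * 1e6 / series_ohm


print(limited_current_ua(3.0, 1e6))  # 3.0: RL drifted to a typical 3V rail
print(limited_current_ua(5.0, 1e6))  # 5.0: a 5V offset applied the same way
```

Through 1MΩ, even 5V can drive only a few microamps, whereas the same voltage applied directly from a low-impedance source is limited only by the source and the ECG input itself.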

More research is needed on the likelihood and effects of a dc offset on RL, and on suitable test methods. It is likely that high dc offsets affect CMRR, since an RL drive pushed close to the rail voltage will feed back a distorted signal, preventing proper noise cancellation. At present, the circuit in Figure 201.106 does not allow an offset to be applied to RL while providing signals to the other electrodes, which is necessary to detect distortion. For now, the recommendation is to test RL to confirm that an indication is at least provided, without checking for distortion.

Figure 1: As the dc offset increases, the signal is at first completely unaffected, then passes through a region of progressive distortion, finally ending as a flat line on the ECG display. Good design ensures that the indication to the operator (e.g. "LEADS OFF") appears well before any distortion.

Figure 2: The large steps in the standard fail to verify that the indication to the operator appears before any distortion. Values up to 5V can also be destructive for the ECG under test and test equipment.

Figure 3: Recommended test method. Search for the point where the message is displayed, reduce the offset until the message disappears, then slowly increase it again, checking for no distortion up to the point where the message appears. Repeat for each lead electrode in both the + and - directions.