This article has been transferred from the original MEDTEQ website with minor editorial update.

~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

The mechanism of breakdown at high frequency is different from that in normal mains frequency dielectric strength tests: it can be thermal, rather than the basic ripping apart of electrons from atoms. Also, for mains frequency tests, there tends to be a large margin between the test requirement and what the insulation can really handle, meaning that errors in the test method are not always critical. In contrast, the margin for HF insulation can be slight, and the test method can greatly affect the test result.

HF burns, in part due to insulation failure, continue to be a major area of litigation. Of particular concern is the high fatality rate associated with unintentional internal burns which may go unnoticed.

For those involved in designing or testing HF insulation, it is absolutely critical to have a good understanding of the theory behind HF insulation and what causes breakdown. This article looks into the detail of one of those mechanisms: thermal effects.

Theory

All insulating materials behave like capacitors. With an ac voltage applied, some current will flow. At 230V 50/60Hz, this current is very small, in the order of 20µA between conductors in a 2m length of mains cable. But at 300-400kHz, the current for the same voltage and length is nearly 10,000 times higher, easily reaching the order of 10mA at 500Vrms for just a short 10cm length of cable.

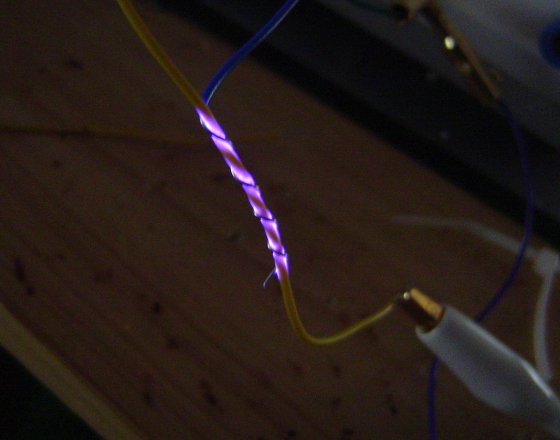
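The scale of these currents can be checked with the ideal capacitor relation I = 2πfCV. The sketch below is only an order-of-magnitude illustration: the capacitance of 100pF per metre between conductors is an assumed figure, not one taken from this article, and real cables will differ.

```python
import math

def capacitive_current(v_rms, freq_hz, cap_per_m, length_m):
    """RMS current through insulation modelled as an ideal capacitor: I = 2*pi*f*C*V."""
    capacitance = cap_per_m * length_m
    return 2 * math.pi * freq_hz * capacitance * v_rms

# Assumed value for illustration only: 100 pF per metre between conductors.
CAP_PER_M = 100e-12

mains_current = capacitive_current(230, 50, CAP_PER_M, 2.0)    # 2 m of mains cable
hf_current = capacitive_current(500, 400e3, CAP_PER_M, 0.10)   # 10 cm of HF cable

print(f"mains: {mains_current * 1e6:.1f} uA")
print(f"HF:    {hf_current * 1e3:.1f} mA")
```

With this assumed capacitance, the mains case comes out in the tens of microamps and the HF case at around ten milliamps, in line with the orders of magnitude quoted above.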

All insulating materials will heat up due to the ac electric field. This is called dielectric heating or dipole heating. One way to think of this is to consider the heating to be due to the friction of molecules moving in the electric field. Microwave ovens use this property to heat food, and dielectric heating is used also in industrial applications such as welding plastics. These applications make use of high frequency, usually in the MHz or GHz range.

At 50-60Hz the amount of heating is so small it amounts to a fraction of a fraction of a degree. But again at 300-400kHz the amount of heating can be enough to melt the insulation.

The temperature rise caused by dielectric heating can be estimated from:

$$\Delta T = \frac{2\pi V^2 f \varepsilon_0 \varepsilon_r \delta t}{H D d^2} \quad \text{(K or °C)}$$

Although this is a rather complicated looking formula, it is mostly made up of material specific parameters that can be found with some research (more details on this are provided below). To get some feel for what this means, let's put this in a table where voltage and thickness are varied, for a frequency of 400kHz, showing two common materials, PVC and Teflon:

Predicted insulation temperature rise @ 400kHz

PVC insulation: temperature rise (K) by voltage and insulation thickness

| Voltage (Vrms) | 1 mm | 0.8 mm | 0.6 mm | 0.4 mm | 0.2 mm |
| --- | --- | --- | --- | --- | --- |
| 200 | 0.7 | 1.1 | 1.9 | 4.3 | 17.3 |
| 400 | 2.8 | 4.3 | 7.7 | 17.3 | 69.3 |
| 600 | 6.2 | 9.7 | 17.3 | 39.0 | 156.0 |
| 800 | 11.1 | 17.3 | 30.8 | 69.3 | 277.3 |
| 1000 | 17.3 | 27.1 | 48.1 | 108.3 | 433.3 |
| 1200 | 25.0 | 39.0 | 69.3 | 156.0 | 623.9 |

Table #1: Because of a high dissipation factor (δ = 0.016), PVC can melt at thicknesses commonly found in insulation.

For broad HF surgical applications, a thickness of at least 0.8mm is recommended.

Teflon insulation: temperature rise (K) by voltage and insulation thickness

| Voltage (Vrms) | 0.5 mm | 0.3 mm | 0.1 mm | 0.05 mm | 0.03 mm |
| --- | --- | --- | --- | --- | --- |
| 200 | 0.0 | 0.1 | 0.5 | 2.1 | 5.9 |
| 400 | 0.1 | 0.2 | 2.1 | 8.5 | 23.7 |
| 600 | 0.2 | 0.5 | 4.8 | 19.2 | 53.4 |
| 800 | 0.3 | 0.9 | 8.5 | 34.2 | 94.9 |
| 1000 | 0.5 | 1.5 | 13.3 | 53.4 | 148.3 |
| 1200 | 0.8 | 2.1 | 19.2 | 76.9 | 213.5 |

Table #2: Teflon has a far lower dissipation factor (less than 0.0002), so even 0.1mm is enough for broad HF surgical applications.

However, because of Teflon's superior qualities and high cost, insulation thickness is often reduced to around the 0.1mm region.

For PVC insulation, these predicted values match well with experimental tests, where a small gauge thermocouple was used as the negative electrode and the temperature monitored during and after the test. For insulation with thickness varying between 0.3mm and 0.5mm, temperatures of over 80°C were recorded at 900Vrms and 300kHz, and increasing the voltage to 1100Vrms resulted in complete breakdown.

Practical testing

As the formula indicates, the temperature rise is a function of voltage squared, and an inverse function of thickness squared. This means, for example, that if the voltage is doubled, or the thickness is halved, the temperature rise quadruples. Even smaller variations of 10-20% can have a big impact on the test result due to the squared relation.
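As a worked illustration of the squared relation, the ratio between two cases can be written out as follows. The 10% and 20% deviations are simply the ends of the range mentioned above, applied here to a higher voltage and a thinner insulation at the same time, with all other parameters assumed unchanged:

$$
\frac{\Delta T_2}{\Delta T_1} = \left(\frac{V_2}{V_1}\right)^2 \left(\frac{d_1}{d_2}\right)^2
$$

$$
\left(\frac{1.1}{0.9}\right)^2 \approx 1.49, \qquad \left(\frac{1.2}{0.8}\right)^2 = 2.25
$$

So a sample that is 20% thinner than nominal, tested at a voltage 20% above nominal, would be expected to show more than twice the nominal temperature rise.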

Because insulation thickness varies considerably in normal wiring, it is possible that one sample may pass while another may not. Although IEC 60601-2-2 and IEC 60601-2-18 do not require multiple samples to be tested, good design practice would dictate enough samples to provide confidence, which in turn depends on the margin. For example, if your rated voltage is only 400Vrms, and your thickness is 0.8 ± 0.2mm, then the high margin means the test is only a formality. On the other hand, if your rating is 1200Vrms and the thickness is 0.8 ± 0.2mm, perhaps 10 samples would be reasonable.
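To put rough numbers on this example, the sketch below scales a single reference point from Table #1 (PVC at 400kHz, 17.3 K at 1000Vrms and 1.0mm) by voltage squared and inverse thickness squared. The function name and structure are illustrative only, and the results differ slightly from the table because the reference value is itself rounded.

```python
# Reference point taken from Table #1 (PVC, 400 kHz): 17.3 K at 1000 Vrms and 1.0 mm.
REF_RISE_K = 17.3
REF_VOLTAGE = 1000.0
REF_THICKNESS_MM = 1.0

def scaled_rise(v_rms, thickness_mm):
    """Scale the reference rise by voltage squared and inverse thickness squared."""
    return REF_RISE_K * (v_rms / REF_VOLTAGE) ** 2 * (REF_THICKNESS_MM / thickness_mm) ** 2

# Thickness tolerance from the example: 0.8 mm +/- 0.2 mm.
thicknesses_mm = (1.0, 0.8, 0.6)
rises = {v: [scaled_rise(v, d) for d in thicknesses_mm] for v in (400, 1200)}

for v, values in rises.items():
    line = ", ".join(f"{d} mm: {r:.1f} K" for d, r in zip(thicknesses_mm, values))
    print(f"{v} Vrms -> {line}")
```

At 400Vrms the whole tolerance band stays within a few kelvin, while at 1200Vrms the thin end of the band approaches 70 K of rise before ambient is added, which is why more samples are worth testing in the second case.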

Test labs need to take care that the applied voltage is accurate and stable, which is not an easy task. Most testing is performed using HF surgical equipment as the source; however, these often do not have a stable output. Also, the measurement of voltage at HF is an area not well understood. In general, passive HV probes (such as 1000:1 probes) should not be used, since at 400kHz these probes operate in a capacitive region in which calibration is no longer valid (see here for more discussion) and large errors are common. Specially selected active probes or custom-made dividers which have been validated at 400kHz (or the frequency of interest) are recommended.

Perhaps the biggest impact on the test result is heat sinking. The above formula for temperature rise assumes that none of the heat produced can escape. However, the test methods described in IEC 60601-2-2 and IEC 60601-2-18 do not require the test sample to be thermally insulated. This means some or most of the heat will be drawn away by the metal conductors on either side of the insulation, by normal convection cooling if the sample is tested in an open environment, or by the liquid if the sample is immersed in fluid or wrapped in a saline soaked cloth.

This heat sinking varies greatly with the test setup. The test in IEC 60601-2-2 (wire wrap test) is perhaps the most severe, but even something as simple as the test orientation (horizontal or vertical) is enough to substantially affect the test result.

Because of these three factors (variations in insulation thickness, applied voltage and heat sinking), bench testing of HF insulation should only be relied on as a backup to design calculations. Test labs should ask the manufacturer for the material properties, and then calculate whether the material is thermally stable at the rated voltage and frequency.

The above formula is repeated here, and the following table provides more details on the parameters needed to estimate temperature rise. The temperature rise should be added to ambient (maybe 35°C for the human body) and then compared to the insulation's temperature limit.

$$\Delta T = \frac{2\pi V^2 f \varepsilon_0 \varepsilon_r \delta t}{H D d^2} \quad \text{(K or °C)}$$

| Symbol | Parameter | Units | Typical value | Notes |
| --- | --- | --- | --- | --- |
| V | Test voltage | Vrms | 600 - 1200Vrms | Depends on rating and test standard. Note that ratings with high peak or peak to peak values may still have moderate rms voltages. Under IEC 60601-2-2, a rating of 6000Vp would require a test with 1200Vrms. |
| f | Test frequency | Hz | 300-400kHz | Depends on rating. Monopolar HF surgical equipment is usually less than 400kHz¹. |
| ε0 | Free space permittivity | F/m | 8.85 × 10⁻¹² | Constant |
| εr | Relative permittivity | unitless | ~2 | Does not vary much with materials |
| δ | Dissipation factor | unitless | 0.0001 ~ 0.02 | Most important factor, varies greatly with material. Use the 1MHz figures (not 1kHz) |
| t | Test time | s | 30s | IEC 60601-2-2 and IEC 60601-2-18 both specify 30s |
| H | Specific heat | J/(g·K) | 0.8 ~ 1 | Does not vary much with materials |
| D | Density | g/cm³ | 1.4 ~ 2 | Does not vary much with materials |
| d | Insulation thickness | mm | 0.1 ~ 1 | Based on material specification. Use minimum value |

¹ Dielectric heating also occurs in bipolar applications, but due to the significantly lower voltage, the effect is much less significant.
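The calculation described above can be sketched in a few lines of Python. This is a minimal illustration rather than a validated tool: the function and variable names are made up for this example, the material values are assumptions picked from the typical ranges in the table (with the 0.0002 dissipation factor quoted for Teflon in Table #2), and the temperature limit is a placeholder that should be replaced with the figure from the material specification. Note that the table's practical units (J/(g·K), g/cm³, mm) must be converted to SI before applying the formula.

```python
import math

EPS_0 = 8.85e-12  # free space permittivity, F/m

def temperature_rise(v_rms, freq_hz, eps_r, diss_factor, time_s,
                     spec_heat_j_per_g_k, density_g_per_cm3, thickness_mm):
    """Temperature rise in K, assuming no heat escapes; converts table units to SI."""
    # J/(g K) -> J/(kg K) and g/cm^3 -> kg/m^3, giving J/(m^3 K)
    heat_capacity = (spec_heat_j_per_g_k * 1e3) * (density_g_per_cm3 * 1e3)
    thickness_m = thickness_mm * 1e-3
    numerator = 2 * math.pi * v_rms ** 2 * freq_hz * EPS_0 * eps_r * diss_factor * time_s
    return numerator / (heat_capacity * thickness_m ** 2)

# Assumed, Teflon-like values chosen from the typical ranges in the table.
rise = temperature_rise(
    v_rms=1000, freq_hz=400e3, eps_r=2.0, diss_factor=0.0002, time_s=30,
    spec_heat_j_per_g_k=1.0, density_g_per_cm3=2.0, thickness_mm=0.1,
)

AMBIENT_C = 35.0   # ambient suggested above for the human body
LIMIT_C = 200.0    # placeholder only; use the limit from the material data sheet

final_temp = AMBIENT_C + rise
thermally_stable = final_temp < LIMIT_C
print(f"rise = {rise:.1f} K, final = {final_temp:.1f} C, stable = {thermally_stable}")
```

With these assumed inputs the estimated rise lands close to the 13.3 K listed in Table #2 for 1000Vrms and 0.1mm. Because the formula ignores heat sinking, the result is a worst case estimate for the stated voltage and thickness.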